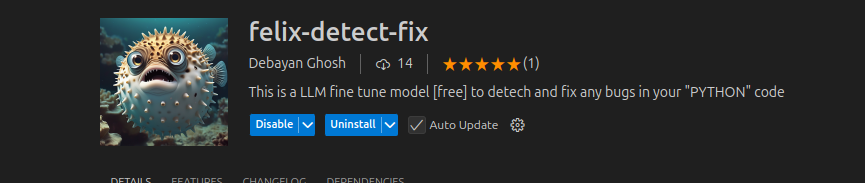

Getting Started with Felix-detect-Fix © Debayan Ghosh

What is felix-detect-fix? Have you ever written tons of code only to get stuck on one simple error on line 12? I get you, and it really is a pain to paste your code into an LLM just to get it fixed. Maybe you are thinking about Copilot. It is indeed good, but to be honest I don't have 10-20 USD to pay for it. So I built this project: something like Copilot that anyone can use to analyze their code and check whether it has a bug. If it does, it recommends a patch. In our testing it reached more than 80% accuracy.

Building a good model was the first goal. But we know that a lot of people don't actually care how the model works; they just want their job done easily. So I also built a VS Code (Visual Studio Code) extension. If there is a bug in your code, it can be patched in place within a minute. Once the models are downloaded, everything runs locally without internet. I built this as a task project during my industrial project at Intel. In the following documentation I will guide you through using felix-detect-fix. So let's get started.

Tutorial - 1: Use our model in your notebook

STEP - 1: If you want to run this on your local machine, make sure Python is installed. To check, open a terminal (PowerShell on Windows, or zsh/bash on macOS or Linux) and run:

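```
python   # for Windows
python3  # for macOS or Linux
```

You should see something like this (type `exit()` to leave the Python prompt afterwards):

```
Python 3.12.3 (main, Feb 4 2025, 14:48:35) [GCC 13.3.0] on linux
```

Next, install Jupyter and the libraries the notebook code uses, then launch Jupyter Notebook:

```
pip install jupyterlab
pip install notebook
pip install transformers torch accelerate  # accelerate is required for device_map="auto"
jupyter notebook
```

Once the notebook is open, import the required libraries in a new cell: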
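Optionally, you can check from Python that the packages are installed before running the model code. This is a minimal sketch, not part of felix-detect-fix. The helper name `check_environment` and the package list are assumptions based on the commands above. It only looks each package up with `importlib.util.find_spec`, so nothing is imported or downloaded.

```python
import importlib.util
import sys

# Packages used in this tutorial (assumed list, based on the install commands above)
REQUIRED = ("torch", "transformers", "accelerate", "notebook")


def check_environment(packages=REQUIRED):
    """Return {package: True/False} depending on whether Python can find each package."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, found in check_environment().items():
        print(f"{name:<13} {'OK' if found else 'missing (pip install ' + name + ')'}")
```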

```python
from transformers import AutoModelForSequenceClassification, AutoTokenizer, AutoModelForCausalLM
import torch
import textwrap
```


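Next, load the two models from the Hugging Face Hub: the bug detector and the bug fixer. You can download them manually, but for your reference, you can also load them like this: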

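```python
# Bug detector: a sequence classifier that labels code as bug-free or buggy
model_name = "felixoder/bug_detector_model"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSequenceClassification.from_pretrained(model_name)

# Bug fixer: a causal language model that generates the patched code
bug_fixer_model = "felixoder/bug_fixer_model"
fixer_tokenizer = AutoTokenizer.from_pretrained(bug_fixer_model)
fixer_model = AutoModelForCausalLM.from_pretrained(
    bug_fixer_model,
    # float16 is meant for GPUs; fall back to float32 on CPU
    torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
    device_map="auto",  # requires the accelerate package
)
```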

STEP - 6: Next, we write a function that classifies a code snippet as buggy or bug-free. If the code contains a bug, it is classified as "buggy"; otherwise it is classified as "bug-free".


```python
def classify_code(code):
    """Classify input code as 'buggy' or 'bug-free' using the trained model."""
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)  # Move model to the correct device

    inputs = tokenizer(code, return_tensors="pt", padding=True, truncation=True, max_length=512).to(device)

    # Inference only, so skip gradient tracking
    with torch.no_grad():
        outputs = model(**inputs)

    logits = outputs.logits
    predicted_label = torch.argmax(logits, dim=1).item()

    # Label 0 means bug-free; any other label means buggy
    return "bug-free" if predicted_label == 0 else "buggy"
```
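The decision rule inside `classify_code` is an argmax over the logits the detector returns. The sketch below reproduces that rule in plain Python without torch. It also turns the logits into probabilities with a softmax, which you could use as a rough confidence score. The helper names are hypothetical, the logits in the example are made up, and the label mapping (0 = bug-free, anything else = buggy) comes from `classify_code` above.

```python
import math


def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def label_from_logits(logits):
    """Mirror classify_code: take the argmax (first one on ties) and map it to a label."""
    predicted_label = max(range(len(logits)), key=lambda i: logits[i])
    label = "bug-free" if predicted_label == 0 else "buggy"
    return label, softmax(logits)[predicted_label]


# Made-up logits for illustration, not real model output
print(label_from_logits([2.0, -1.0]))
print(label_from_logits([0.1, 0.5]))
```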

```python
def fix_buggy_code(code):
    """Generate a fixed version of the buggy code using the bug fixer model."""
    prompt = f"### Fix this buggy Python code:\n{code}\njust give the fixed code nothing else\n### Fixed Python code:\n"

    # fixer_model was placed by device_map="auto", so send the inputs to the same device
    inputs = fixer_tokenizer(prompt, return_tensors="pt").to(fixer_model.device)

    with torch.no_grad():
        outputs = fixer_model.generate(
            **inputs,
            max_new_tokens=256,  # Limit only the generated part (max_length would also count the prompt)
            do_sample=False,  # Make it deterministic
            num_return_sequences=1,  # Only one output
        )

    fixed_code = fixer_tokenizer.decode(outputs[0], skip_special_tokens=True)
    # The decoded text starts with the prompt, so keep only what follows the marker
    fixed_code = fixed_code.split("### Fixed Python code:")[1].strip() if "### Fixed Python code:" in fixed_code else fixed_code
    return textwrap.dedent(fixed_code).strip()
```
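The fixer is a causal language model, so `generate` returns the prompt tokens followed by the new ones. That is why `fix_buggy_code` splits the decoded text on the `### Fixed Python code:` marker. The sketch below runs that post-processing step without the model, so you can see what it does to a decoded string. The function name `extract_fixed_code` and the sample string are made up for illustration.

```python
import textwrap

MARKER = "### Fixed Python code:"


def extract_fixed_code(decoded):
    """Keep only the text after the marker, then dedent and trim it, as fix_buggy_code does."""
    if MARKER in decoded:
        decoded = decoded.split(MARKER)[1].strip()
    return textwrap.dedent(decoded).strip()


# A made-up decoded string: the echoed prompt followed by a generated fix
decoded = (
    "### Fix this buggy Python code:\n"
    "for in nge(0, 9\n"
    "    print(i)\n"
    "### Fixed Python code:\n"
    "for i in range(0, 9):\n"
    "    print(i)\n"
)
print(extract_fixed_code(decoded))
```

If the model happens to repeat the marker in its output, `split(...)[1]` keeps only the text between the first and second occurrence.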

```python
# Example buggy code input
code_input = """
for in nge(0, 9
    print(i)
if val > 12:
    print("val {val} is greater")
else:
    print("val {val} is less")
"""

# Classify the code using the fine-tuned model
status = classify_code(code_input)

if status == "buggy":
    print("Buggy Code Detected")
    fixed_code = fix_buggy_code(code_input)
    print("Fixed Code:")
    print(fixed_code)
else:
    print("Bug-free Code")
```
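The fixer's suggestion is generated by a model, so it is worth checking before you paste it back into your file. One cheap check is to confirm that the suggestion at least parses as Python, using the standard `ast` module. This is an extra step, not something felix-detect-fix does, and the helper name `is_valid_python` is hypothetical. Passing it only means the code is syntactically valid, not that its logic is correct.

```python
import ast


def is_valid_python(source):
    """Return True if `source` parses as Python, False if it raises a SyntaxError."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


buggy = "for in nge(0, 9\n    print(i)"
fixed = "for i in range(0, 9):\n    print(i)"
print(is_valid_python(buggy))  # False: the original snippet does not parse
print(is_valid_python(fixed))  # True: the suggested fix parses
```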

Tutorial - 2: Use My Extension in VS Code Without Internet

STEP - 1: Open VS Code and press CTRL/CMD + N to create a new Python file. Name it something like test.py and save it somewhere on your computer. Then open the Extensions view (or press CTRL/CMD + SHIFT + X), search for felix-detect-fix, and install it.

STEP - 2: After installation, create a new file in the same folder as test.py and name it run_model.py. This is important: please don't give it any other name. Then paste in the following code.


```python
import os
import sys

import torch
from huggingface_hub import snapshot_download  # Downloads model repos from the Hugging Face Hub
from transformers import (
    AutoModelForCausalLM,
    AutoModelForSequenceClassification,
    AutoTokenizer,
)

# Get absolute paths relative to THIS file
BASE_DIR = os.path.dirname(os.path.abspath(__file__))
MODEL_DIR = os.path.join(BASE_DIR, "models")

# Create model directory if it doesn't exist
os.makedirs(MODEL_DIR, exist_ok=True)

# Model configuration
MODELS = {
    "detector": {
        "repo": "felixoder/bug_detector_model",
        "path": os.path.join(MODEL_DIR, "detector"),
    },
    "fixer": {
        "repo": "felixoder/bug_fixer_model",
        "path": os.path.join(MODEL_DIR, "fixer"),
    },
}

# Download models if missing (the only step that needs internet)
for model in MODELS.values():
    if not os.path.exists(model["path"]):
        print(f"Downloading {model['repo']}...")
        snapshot_download(
            repo_id=model["repo"],
            local_dir=model["path"],
            local_dir_use_symlinks=False,  # deprecated in newer huggingface_hub releases
        )

# Now load the models from the local folders
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
torch_dtype = torch.float16 if device.type == "cuda" else torch.float32

# Load detector model
detector_tokenizer = AutoTokenizer.from_pretrained(
    MODELS["detector"]["path"], local_files_only=True
)
detector_model = AutoModelForSequenceClassification.from_pretrained(
    MODELS["detector"]["path"], local_files_only=True, torch_dtype=torch_dtype
).to(device)

# Load fixer model
fixer_tokenizer = AutoTokenizer.from_pretrained(
    MODELS["fixer"]["path"], local_files_only=True
)
fixer_model = AutoModelForCausalLM.from_pretrained(
    MODELS["fixer"]["path"], local_files_only=True, torch_dtype=torch_dtype
).to(device)


def classify_code(code):
    """Return "bug-free" or "buggy" for a code snippet."""
    inputs = detector_tokenizer(
        code, return_tensors="pt", padding=True, truncation=True, max_length=512
    ).to(device)
    with torch.no_grad():
        outputs = detector_model(**inputs)
    predicted_label = torch.argmax(outputs.logits, dim=1).item()
    return "bug-free" if predicted_label == 0 else "buggy"


def fix_buggy_code(code):
    """Ask the fixer model for a patched version of the snippet."""
    # A regular f-string cannot span several lines, so the line breaks are written as \n
    prompt = f"### Fix this buggy Python code:\n{code}\n### Fixed Python code:\n"
    inputs = fixer_tokenizer(prompt, return_tensors="pt").to(device)
    with torch.no_grad():
        outputs = fixer_model.generate(
            **inputs, max_new_tokens=256, do_sample=False, num_return_sequences=1
        )
    fixed_code = fixer_tokenizer.decode(outputs[0], skip_special_tokens=True)
    # The decoded text starts with the prompt, so keep only what follows the marker
    return (
        fixed_code.split("### Fixed Python code:")[1].strip()
        if "### Fixed Python code:" in fixed_code
        else fixed_code
    )


if __name__ == "__main__":
    # Called as: python run_model.py <classify|fix> "<code>"
    command = sys.argv[1]
    code = sys.argv[2]
    if command == "classify":
        print(classify_code(code))
    elif command == "fix":
        print(fix_buggy_code(code))
```
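The `__main__` block above reads `sys.argv` directly. The script is therefore called as `python run_model.py classify "<code>"` or `python run_model.py fix "<code>"`, and a missing argument ends in an `IndexError`. If you run the script by hand, a variant built on the standard `argparse` module gives you a usage message and rejects unknown commands. This is a hypothetical sketch: `build_parser` is not part of felix-detect-fix, and it assumes the extension passes the command and the code as two separate arguments.

```python
import argparse


def build_parser():
    """CLI for run_model.py: a command (classify or fix) followed by the code as one string."""
    parser = argparse.ArgumentParser(prog="run_model.py")
    parser.add_argument("command", choices=["classify", "fix"], help="what to do with the code")
    parser.add_argument("code", help="the Python source to check, passed as a single argument")
    return parser


# What `python run_model.py classify "print(1)"` would parse to.
# In run_model.py you would then dispatch to classify_code or fix_buggy_code.
args = build_parser().parse_args(["classify", "print(1)"])
print(args.command, repr(args.code))
```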

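Your folder should then look like this. The `models` folder, with its `detector` and `fixer` subfolders, is created by run_model.py the first time it runs:

```
models
|
|__detector
|
|__fixer
run_model.py
test.py
```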
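If the extension cannot find the models, start debugging by confirming that this layout actually exists. The sketch below is hypothetical: the function name `missing_paths` is not from the project. It lists which of the expected entries are absent from a folder. test.py is left out because it is just the file you are editing.

```python
import os

# Expected entries, taken from the folder structure above (test.py omitted)
EXPECTED = [
    "run_model.py",
    os.path.join("models", "detector"),
    os.path.join("models", "fixer"),
]


def missing_paths(base_dir, expected=EXPECTED):
    """Return the expected entries that do not exist under base_dir."""
    return [p for p in expected if not os.path.exists(os.path.join(base_dir, p))]


if __name__ == "__main__":
    missing = missing_paths(os.getcwd())
    print("Layout looks complete" if not missing else f"Missing: {missing}")
```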